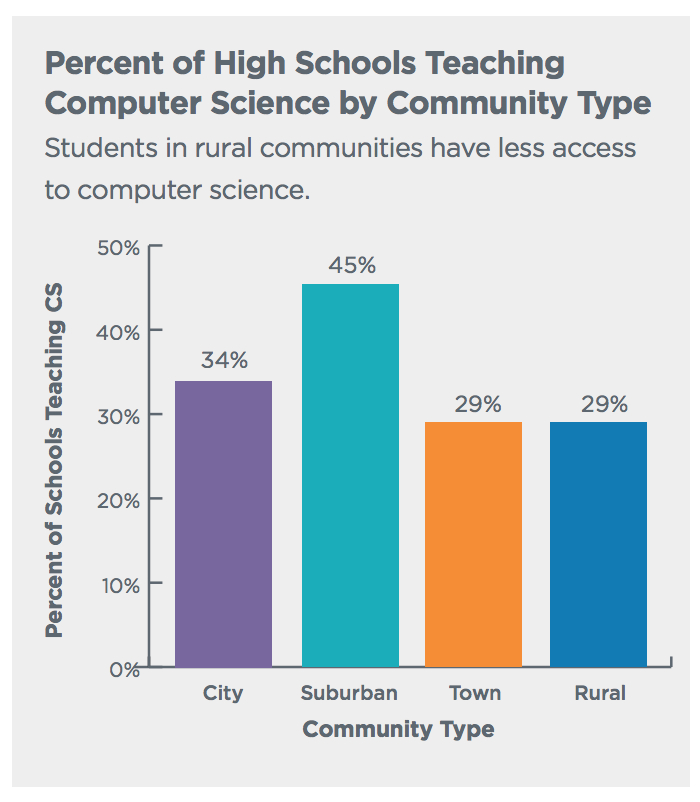

The Code.org Advocacy Coalition recently released a report called “The State of Computer Science Education:…

Author: Chris Sanders

I’m an avid reader and go to extra effort to take notes about things I find…

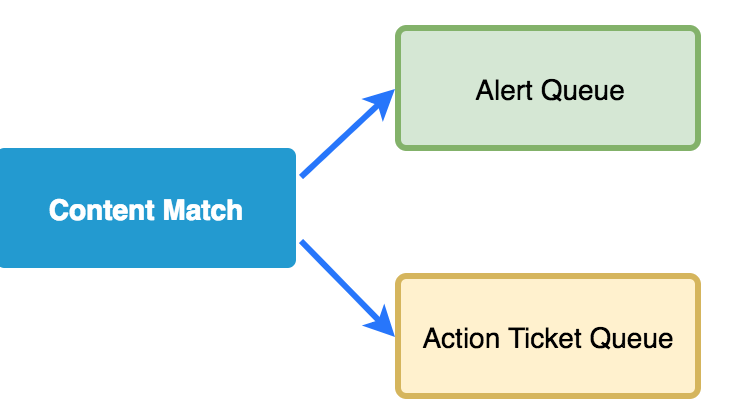

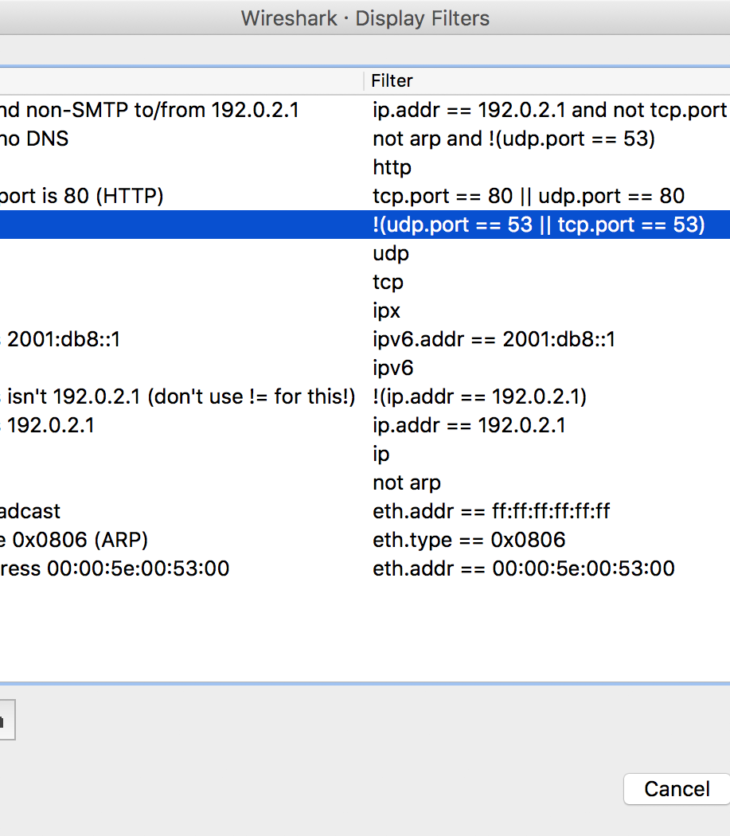

A simple content match provides the launching point for many of our investigations. You write…

As an analyst acquires experience investigating threats, they will naturally gain mastery of evidence. This…

Whittling is a lost art, but it’s a beautiful process. A craftsman chooses a lifeless…

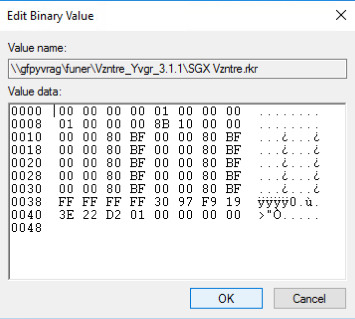

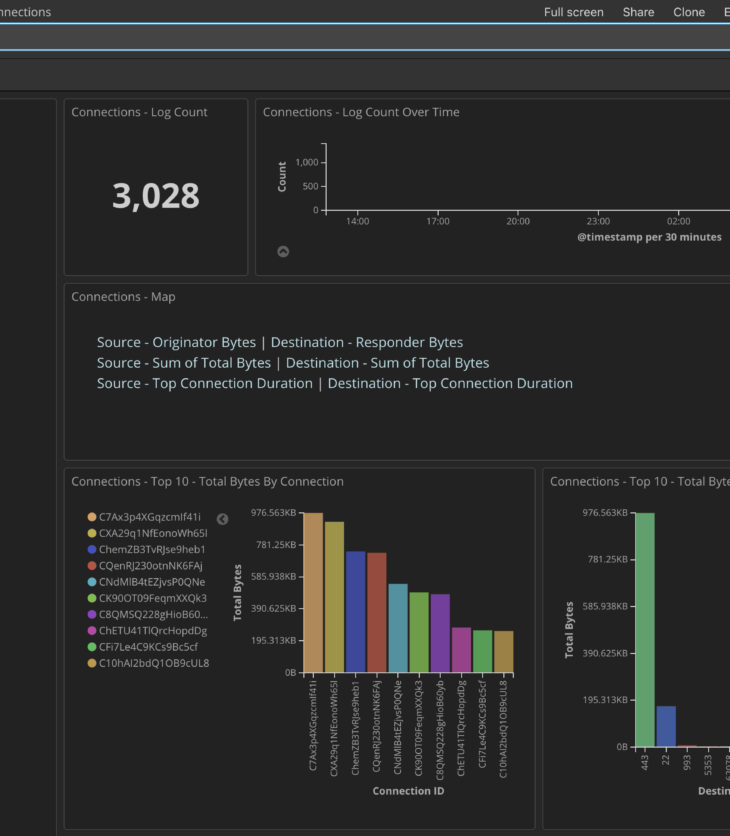

There’s nothing more frustrating than knowing the answers you need lie in a mountain of…

If you’ve eaten stew, drunk whiskey, or put gas in your car, then you’ve been…

I’m bringing my Investigation Theory course to Charlottesville, VA on June 19th and 20th. This…

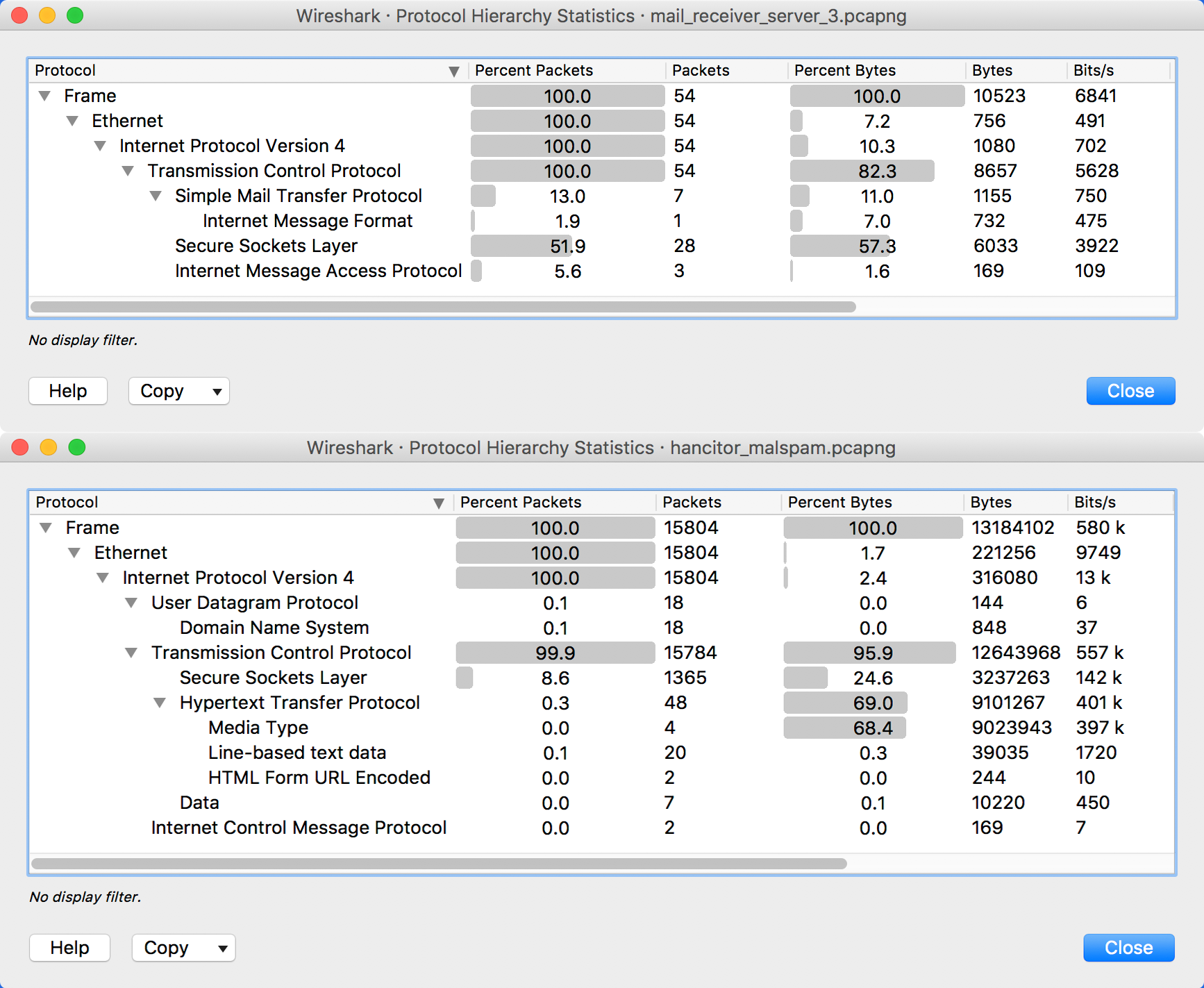

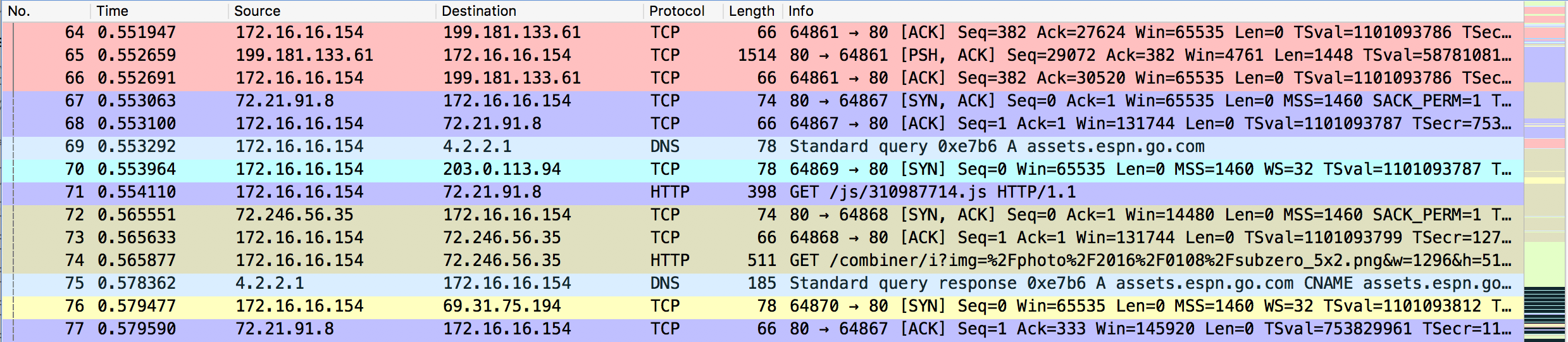

In addition to the packet colorization technique, the first article in this series discussed the…

Overwhelmed. That’s how nearly everyone would describe their first experience with packet analysis. You fire…