Most cyber security educators are practitioners first and educators second. While this model ensures learners…

Category: Psychology

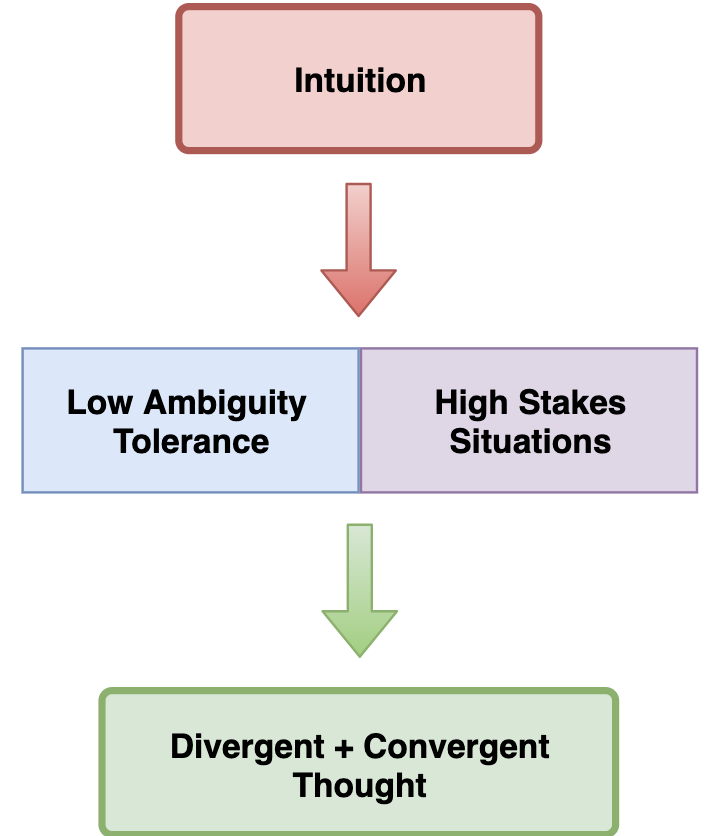

Creative Choices: Developing a Theory of Divergence, Convergence, and Intuition in Security Analysts

Humans lie at the heart of security investigations, but there is an insufficient amount of…

The typical answer to someone who asks how they can break into information security is,…

Unwritten social contracts dictate many of the rules of modern employment between the employer and…

I’ve argued for some time that information security is in a growing state of cognitive…

As an analyst acquires experience investigating threats, they will naturally gain mastery of evidence. This…

Today I want to talk to you about forcing decisions and how you can use…

When I moved to Georgia, I started riding my mountain bike several times a week.…

Would you perform your job if you weren’t paid? That’s the question people are often…

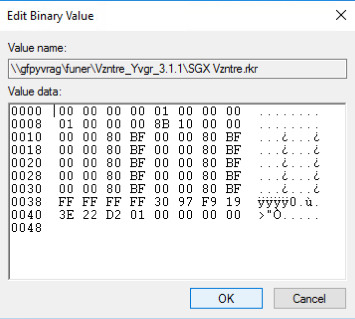

This is part three in the Know your Bias series where I examine a specific type…