In the realm of network security monitoring and intrusion analysis, we are all slaves to our data. Typically, we rely on two types of data at the network layer: full content data (PCAP) and session data (Netflow). Both are easy to generate given the right sensor placement, and there are many great resources for learning how to get good value out of the data. That said, each has its own shortcomings.

Session Data (Netflow)

Netflow is a standard form of session data that details the ‘who, what, when, and where’ of network traffic. I tend to equate this to the call records you’ll see on your monthly cell phone bill.

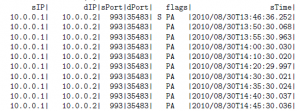

Figure 1: Partial Netflow Records Exported from SiLK

The best thing about netflow is that it provides a lot of value with minimal disk storage overhead. It's really a lot of bang for your buck. Most commercial-grade routers and firewalls will generate netflow, and there are many free and open source tools, such as SiLK, that can be used to generate and analyze it as well. There is even a yearly conference called FloCon where people get together and talk about cool things you can do with netflow. The only real downside to netflow data is that it doesn't paint a complete picture, so it's often best used as a complement to full content data.

Full Content Data (PCAP)

If netflow session data is equivalent to a call log, then full content data in the form of PCAP is just like having a full recording of all of your calls.

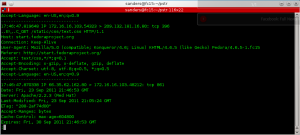

Figure 2: PCAP Data Investigation with Wireshark

The PCAP format has become nearly universal and can be collected and analyzed with a variety of free and open source applications like Dumpcap, Tcpdump, Wireshark, and more. Many of the more popular intrusion detection systems, such as Snort, use the PCAP format as well. As an analyst, having PCAP data available makes the analytical process a dream come true, as it provides the highest level of context when investigating an anomaly. The primary downside to full content data is its incredibly high disk storage overhead, which prevents most organizations from collecting and storing any reasonable amount of it. In my experience, the organizations that are capable of collecting and storing PCAP can only measure the amount stored in hours, rather than days. In addition, unless you have an idea of what you are looking for within a reasonable time range, it can be difficult to locate things, which limits flexibility in analysis.

Application Layer Metadata

The concept of application layer metadata first came up for me in a discussion about additional data types that would be useful to the network security monitoring function, ones that would sit as a happy medium between session data and full content data. It didn't take much number crunching to find that on most of the networks we monitored, the vast majority of the traffic was application layer data from a few common protocols. The largest of these was HTTP, followed by the other usual suspects: SSL, DNS, and SMTP.

Starting with a couple of these protocols as a baseline, we quickly realized that we could save ourselves a lot of disk storage overhead by eliminating the data we didn't need. There are countless ways to do this, but we wanted to keep it simple, so we started by using tcpdump to read in our PCAP data and write the ASCII-formatted output to a file. Then we ran the Unix strings command on that file to get rid of any binary data that we couldn't read anyway. We weeded out a few more things that we didn't want through a magical combination of sed and awk, added the appropriate timestamps, formatted the data a bit more nicely, and we had achieved our goal.
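The pipeline above is a bash script built on tcpdump, strings, sed, and awk. As a rough illustration of the same idea, here is a minimal Python sketch, assuming classic libpcap files with microsecond timestamps (not pcapng). It walks each packet record, keeps runs of four or more printable ASCII characters (roughly what `strings` does by default), and prefixes each run with the packet's UTC timestamp. The function names and the `timestamp | string` line layout are hypothetical and are not the actual PSTR format.

```python
import re
import struct
from datetime import datetime, timezone
from typing import BinaryIO, Iterator, List, Tuple


def printable_strings(data: bytes, min_len: int = 4) -> List[str]:
    """Return runs of printable ASCII (plus tab), roughly like `strings`."""
    pattern = re.compile(rb"[\t\x20-\x7e]{%d,}" % min_len)
    return [m.group().decode("ascii") for m in pattern.finditer(data)]


def iter_pcap(f: BinaryIO) -> Iterator[Tuple[float, bytes]]:
    """Yield (epoch seconds, packet bytes) from a classic microsecond pcap file."""
    header = f.read(24)  # global header: magic, version, zone, sigfigs, snaplen, linktype
    if len(header) < 24:
        raise ValueError("truncated pcap global header")
    magic = struct.unpack("<I", header[:4])[0]
    if magic == 0xA1B2C3D4:
        endian = "<"  # file was written little-endian
    elif magic == 0xD4C3B2A1:
        endian = ">"  # file was written big-endian
    else:
        raise ValueError("not a classic microsecond-resolution pcap file")
    record = struct.Struct(endian + "IIII")  # ts_sec, ts_usec, incl_len, orig_len
    while True:
        raw = f.read(record.size)
        if len(raw) < record.size:
            break  # end of file (or a truncated trailing record header)
        ts_sec, ts_usec, incl_len, _orig_len = record.unpack(raw)
        yield ts_sec + ts_usec / 1_000_000, f.read(incl_len)


def pcap_to_pstr(f: BinaryIO) -> Iterator[str]:
    """Yield hypothetical 'YYYY-MM-DD HH:MM:SS | string' lines, one per printable run."""
    for ts, data in iter_pcap(f):
        stamp = datetime.fromtimestamp(ts, tz=timezone.utc).strftime("%Y-%m-%d %H:%M:%S")
        for text in printable_strings(data):
            yield f"{stamp} | {text}"


# Example usage (file names are placeholders):
# with open("capture.pcap", "rb") as src, open("capture.pstr", "w") as dst:
#     for line in pcap_to_pstr(src):
#         dst.write(line + "\n")
```

Unlike the tcpdump-then-strings approach, this scans raw packet bytes, so an occasional printable run from Ethernet, IP, or TCP headers will slip through; filtering those out is the kind of cleanup the sed and awk stage handles.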

The end result was a reasonably small bash script that generates application layer metadata in the form of something we call a Packet String, or PSTR file (pronounced pee-stur). The script is designed to run as a cron job, parsing continually generated PCAP files and producing an accompanying PSTR file for each one.
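When the script runs from cron, each run has to decide which PCAP files still need a PSTR counterpart. A minimal Python sketch of that bookkeeping, assuming (hypothetically) that PCAP and PSTR files live in separate directories and share a base name (`x.pcap` becomes `x.pstr`): a PCAP file is pending if its PSTR file is missing or older than the PCAP.

```python
from pathlib import Path
from typing import List


def pending_pcaps(pcap_dir: Path, pstr_dir: Path) -> List[Path]:
    """Return PCAP files whose PSTR counterpart is missing or out of date."""
    pending = []
    for pcap in sorted(pcap_dir.glob("*.pcap")):
        pstr = pstr_dir / (pcap.stem + ".pstr")  # hypothetical naming convention
        if not pstr.exists() or pstr.stat().st_mtime < pcap.stat().st_mtime:
            pending.append(pcap)
    return pending
```

In practice you would probably also skip the PCAP file the sensor is still writing, for example by ignoring the newest file in the directory.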

Figure 3: Sample PSTR Data

You can download the bash script that generates this data from PCAP files here. This is provided as a simple proof of concept and takes an input PCAP file and generates an output PSTR file. Now that we’ve got application layer metadata being generated in the form of PSTR files, let’s take a look at a few use cases.

Using PSTR as a Data Source

The original goal of generating PSTR files was to provide a data format with a low disk storage overhead that provided value to analysts as a secondary NSM data source. In a typical workflow, analysts would take an input from a detection capability, such as an IDS, and then PSTR would be another data source available to the analyst in order to provide supporting evidence in the analysis of a potential event or incident. I’ve written a few use cases here. Some of these are theoretical, but others are examples of actual things that have happened since implementing the PSTR data type.

Malware Infection Use Case

Let's look at an example in which we've just received an alert from our IDS stating that an internal system is exhibiting symptoms of infection. The signature fired because it saw a malicious GET request associated with a known botnet C2 server. We examined the host and confirmed that the GET request matched what the signature was written to detect, so we were able to determine with reasonable certainty that this box was infected.

Upon closer examination, we noticed that the infected host was also sending an HTTP POST containing a very distinctive string. It looked like it might be an indicator of malicious activity, but none of our existing signatures fired on it. In this case, an analyst was able to use grep to quickly find other instances of the same string within the HTTP header data of all traffic on our monitored networks. PSTR data proved incredibly useful in finding other infected boxes across multiple networks.
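The search itself was a grep. For readers who would rather script it, here is a rough Python equivalent of a recursive, fixed-string search, assuming PSTR files end in `.pstr` and sit under one root directory (both assumptions). Returning the file name and line number makes it easy to pivot from each hit back to the corresponding PCAP, if it is still retained.

```python
from pathlib import Path
from typing import Iterator, Tuple


def search_pstr(root: Path, needle: str) -> Iterator[Tuple[Path, int, str]]:
    """Yield (file, line number, line) for every PSTR line containing needle."""
    for path in sorted(root.rglob("*.pstr")):
        # PSTR content is printable ASCII; replace anything unexpected instead of failing.
        with path.open(encoding="ascii", errors="replace") as f:
            for lineno, line in enumerate(f, start=1):
                if needle in line:
                    yield path, lineno, line.rstrip("\n")
```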

Targeted Phishing Use Case

As a theoretical example, consider a case where several users have contacted your security team because they've received a very suspicious e-mail that seems to be targeted specifically at your company. The e-mail mentions a payroll adjustment and asks the recipient to follow the provided link and log in with their employee ID number and password.

After examining the e-mail, you've determined that it was sent from a spoofed address and that it uses a slightly modified subject line that is unique to each recipient. Based on the reports you've received from users, you've also noticed that these e-mails have been arriving over the past several weeks. One of the things you would want to do in this case is find out who within your organization received this e-mail. That lets you warn those users not to click the link, and it may reveal a pattern in why the recipients were chosen (access to certain systems, high-profile employees, etc.).

Typically, you might search through Exchange or Postfix logs to find the recipients. This, of course, relies on your organization having adequate logging and retention of those logs. Because the subject line varies from recipient to recipient, however, these data sources are difficult to query. Using PSTR data, you can write a quick regular expression that matches the semi-unique subject lines and get these results from a single fast query.
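Because each subject line differs slightly, the trick is a regular expression anchored on the part that stays constant. A minimal Python sketch, assuming PSTR lines carry the raw `Subject:` header text; the "payroll adjustment" pattern is a hypothetical stand-in for whatever constant phrase the real campaign uses.

```python
import re
from typing import Iterable, List

# Hypothetical pattern: the constant phrase, with any per-recipient variation around it.
PHISH_SUBJECT = re.compile(r"Subject:\s*(.*payroll adjustment.*)", re.IGNORECASE)


def matching_subjects(lines: Iterable[str]) -> List[str]:
    """Return the subject text of every PSTR line that matches the phishing pattern."""
    hits = []
    for line in lines:
        m = PHISH_SUBJECT.search(line)
        if m:
            hits.append(m.group(1).strip())
    return hits
```

Each hit still carries its PSTR timestamp, so if the SMTP envelope lines (such as `RCPT TO:`) from the same session are also in your PSTR data, you can tie each variant back to its recipient.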

Using PSTR as a Detection Capability

The one thing we didn't anticipate when we created the PSTR file type was its use as a second-level detection capability. By second-level analysis and detection, I mean moving past near real-time detection to the point where analysts review traffic retrospectively to find things that signatures don't catch. This often involves statistical and anomaly-based detection over large data sets, which is something PSTR is well suited for.

User Agent Use Case

The user agent field within an HTTP header is always a good source for catching the low-hanging fruit when it comes to malware infections on a network. Lots of malware uses a custom value in this field that deviates from standard browser identification strings. The detection technique I've seen most commonly deployed to catch these types of infections at the network level relies on IDS/IPS signatures. As a matter of fact, if you subscribe to the popular Snort rule sets, then you are probably using their user agent rules to detect known bad user agents.

The only problem with that detection scenario is that malware is now being generated at a rate much faster than the AV and IDS companies can keep up with. As a result, there are a LOT of malicious user agents out there that aren't accounted for. In addition, some malware uses randomly generated user agent strings, which makes it much more difficult to write adequate signatures for detection.

One day, one of our analysts started playing around with PSTR data and wrote a quick script to parse all of the PSTR data for a given site, pull out all of the user agent strings, and sort them by how often each one occurred. As expected, there were thousands of occurrences of the typical Firefox and Internet Explorer user agents, but what was really interesting was a set of user agent strings, each seen only a handful of times, that didn't correspond to any known browser. After a bit more analysis, we found quite a few machines that were infected with malware variants using these custom user agent strings. This one was a home run.
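A script along those lines could look like the following minimal Python sketch. It is not the analyst's original code; it assumes PSTR lines contain the raw `User-Agent:` header, and the cutoff of five occurrences is an arbitrary, hypothetical choice.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple

UA_RE = re.compile(r"User-Agent:\s*(.+?)\s*$", re.IGNORECASE)


def rare_user_agents(lines: Iterable[str], max_count: int = 5) -> List[Tuple[str, int]]:
    """Count User-Agent values and return the rarest ones, least common first."""
    counts: Counter = Counter()
    for line in lines:
        m = UA_RE.search(line)
        if m:
            counts[m.group(1)] += 1
    rare = [(ua, n) for ua, n in counts.items() if n <= max_count]
    return sorted(rare, key=lambda item: (item[1], item[0]))  # by count, then alphabetically
```

Sorting from rarest to most common puts the handful of odd strings at the top of the list, which is where the interesting results showed up.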

E-Mail Subject Use Case

The previous use case got us thinking about other common fields within application layer metadata that we could analyze the same way. One such field was the e-mail subject line. We modified our user agent parsing code to look at all PSTR data related to e-mail subject lines, and again had some very cool results.

Instead of most of the distribution being concentrated on one or two strings, as we saw with user agents, the distribution was spread very widely across thousands of different subject lines. This was expected, since most e-mails have a unique subject line. What interested us here, however, was that a few subject lines appeared far more often than the rest. The first item of interest we found was that some sites had misconfigured applications that were mailing things to places they shouldn't go, which was worth pursuing and getting fixed. We also found a user who was e-mailing all of his work documents to himself every night as a scheduled task, which was a policy violation.
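Flipping the user agent approach around to surface the most heavily reused subject lines is a small change. A minimal Python sketch, assuming PSTR lines contain the raw `Subject:` header; the `min_count` threshold is hypothetical and would need tuning against your own traffic volume.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple

SUBJECT_RE = re.compile(r"Subject:\s*(.*?)\s*$", re.IGNORECASE)


def frequent_subjects(lines: Iterable[str], min_count: int) -> List[Tuple[str, int]]:
    """Return subject lines seen at least min_count times, most common first."""
    matches = (SUBJECT_RE.search(line) for line in lines)
    counts = Counter(m.group(1) for m in matches if m)
    return [(subject, n) for subject, n in counts.most_common() if n >= min_count]
```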

This was all found with a very basic level of analysis, and it had some very real and useful results.

Additional Analytic Capabilities

The thing I love about this data format is that we can store a lot of it and it's really quick to search through. That opens the door to a ton of things from a detection standpoint. A few immediate ideas include:

- Searching for unique values within HTTP, SMTP, DNS, and SSL headers

This is what we did in most of these examples. You can really quickly sort through the unique values within certain fields and find the outliers that warrant additional investigation.

- Byte entropy of certain fields to locate encrypted data where it shouldn’t be

It’s a common tactic to exfiltrate encrypted data through commonly used channels in an effort to hide in plain sight. Performing entropy calculations on GET and POST requests in an effort to find encrypted data would be a good way to detect where this might be occurring.
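One way to do this is to compute Shannon entropy in bits per byte. Plain English text typically scores well below random data, while encrypted or compressed data approaches 8. A minimal Python sketch; the 4.5-bit threshold and 20-character minimum are hypothetical values. Short strings cannot score high (a string of length n tops out at log2(n) bits), and base64-encoded data tops out near 6 bits, so both knobs need tuning.

```python
import math
from collections import Counter
from typing import Iterable, List


def shannon_entropy(data: bytes) -> float:
    """Shannon entropy of a byte string, in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    total = len(data)
    return -sum((n / total) * math.log2(n / total) for n in Counter(data).values())


def high_entropy_values(values: Iterable[str], threshold: float = 4.5,
                        min_length: int = 20) -> List[str]:
    """Flag field values (e.g. GET URIs or POST bodies) whose entropy meets the threshold."""
    return [
        v for v in values
        if len(v) >= min_length and shannon_entropy(v.encode("utf-8")) >= threshold
    ]
```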

- Checking the length of certain fields for anomalies

You can do some statistical analysis and determine that certain fields will often have values that have a length falling in a particular range. Using that, you can flag on outliers that are far too short or too long in order to look for anomalies. I’ve seen good success in doing this with the various HTTP header fields, e-mail subject lines, and SSL certificate exchanges.
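The text does not prescribe a specific statistic. One simple, robust option is Tukey's fences on the length distribution: flag anything more than k interquartile ranges below the first quartile or above the third. A minimal Python sketch, with k = 1.5 as the conventional but still hypothetical default:

```python
import statistics
from typing import List, Sequence


def length_outliers(values: Sequence[str], k: float = 1.5) -> List[str]:
    """Flag values whose length falls outside Tukey's fences on the length distribution."""
    lengths = [len(v) for v in values]
    if len(lengths) < 4:
        return []  # too few samples to estimate quartiles meaningfully
    q1, _median, q3 = statistics.quantiles(lengths, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if not low <= len(v) <= high]
```

Quartiles are used instead of mean and standard deviation because a few extreme values, which are exactly what you are hunting for, would otherwise inflate the spread and hide themselves.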

- Enumerating Downloads of Particular File Types

There is a great deal of value in being able to list all of the executables or PDF files downloaded within a certain time span. This is easy to do quickly by analyzing the HTTP header data within PSTR files.
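A minimal Python sketch of that enumeration, assuming PSTR lines contain HTTP request lines such as `GET /path HTTP/1.1`. It matches on the file extension in the requested URI, handling both relative and absolute (proxy-style) URIs; the default extension list is only an example.

```python
import posixpath
import re
from typing import Iterable, List
from urllib.parse import urlsplit

REQUEST_RE = re.compile(r"\b(?:GET|POST)\s+(\S+)\s+HTTP/\d\.\d")


def downloads_by_extension(lines: Iterable[str],
                           extensions: Iterable[str] = (".exe", ".pdf")) -> List[str]:
    """Return requested URIs whose path ends in one of the given extensions."""
    wanted = {e.lower() for e in extensions}
    hits = []
    for line in lines:
        m = REQUEST_RE.search(line)
        if not m:
            continue
        uri = m.group(1)
        # urlsplit drops any query string; splitext pulls the extension off the path.
        ext = posixpath.splitext(urlsplit(uri).path)[1].lower()
        if ext in wanted:
            hits.append(uri)
    return hits
```

Extension matching misses files served from scripts (for example `download.php?id=7`). If response headers such as `Content-Type` or `Content-Disposition` are also in your PSTR data, checking those as well would be more reliable.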

Of course, all of these things CAN be done on PCAP data as well, but it would take significantly more processing power, and it's likely that you can't store enough PCAP data at any given time to make it worthwhile.

Conclusions

The concept of collecting and storing application layer metadata isn't anything revolutionary. As a matter of fact, the idea isn't even completely original, as I've encountered other organizations that do similar things. There are even some commercial products that do this as well. However, as far as I know, nobody is sharing their methods and code with the world, which is the purpose of this post. Analysts live and die by their data feeds, and I think application layer metadata, in whatever form it takes, has its place among the other primary network data types. You can download the proof of concept code to generate and parse PSTR files here. I'm excited to see this data format evolve as we find more and more uses for it. Look for more updates on this front as the code base continues to advance.

* A special thanks to my colleague Jason Smith for doing most of the legwork on writing the POC code.