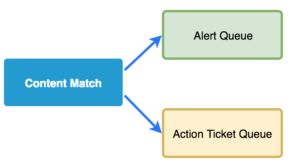

A simple content match provides the launching point for many of our investigations. You write a signature, it matches something, that generates an alert, and we investigate the alert to determine whether something malicious actually happened. This workflow is so common that we tend to consider matching and alerting to be synonymous. This is problematic because it obscures the many other useful paths a match can take.

In this post, I’m going to discuss how matching can be combined with other functions to benefit security analysts.

Content Matching

Content matching is the essential component of search. You provide content and use a tool to sift through data to determine whether that content exists within it. Manual content matching, enabled by tools like SIEM, EDR, and log aggregators, is a fundamental investigative process. Not all content matching is manual, however.

Beyond selectively searching through data sets, we also have software that constantly looks for content matches as data comes into existence. Most organizations rely on automated content matching in the form of intrusion detection systems that constantly evaluate data from the network or host. It’s this automated matching, in its various forms, that I’ll focus on in this article.
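To make the distinction concrete, here is a minimal sketch of an automated matcher in Python. It assumes log lines arrive as an iterable of strings and uses two made-up regex signatures; the rule names and patterns are illustrative, not taken from any particular IDS. Note that the function only yields matches. Deciding what happens to each match is left to whatever consumes it.

```python
import re
from typing import Iterable, Iterator

# Hypothetical signatures for illustration: name -> compiled pattern.
SIGNATURES = {
    "psexec-service": re.compile(r"PSEXESVC", re.IGNORECASE),
    "curl-user-agent": re.compile(r"User-Agent: curl/"),
}


def match_stream(lines: Iterable[str]) -> Iterator[tuple[str, str]]:
    """Check each line as it arrives and yield (signature_name, line) per hit."""
    for line in lines:
        for name, pattern in SIGNATURES.items():
            if pattern.search(line):
                yield name, line


# Usage: a static list stands in for a live feed of log lines.
incoming = [
    "GET /index.html HTTP/1.1",
    "User-Agent: curl/8.4.0",
    "Service PSEXESVC was installed",
]
hits = list(match_stream(incoming))
```

Because `match_stream` consumes any iterable lazily, the same function could sit on a generator that tails a file or reads from a socket.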

Matching Outputs

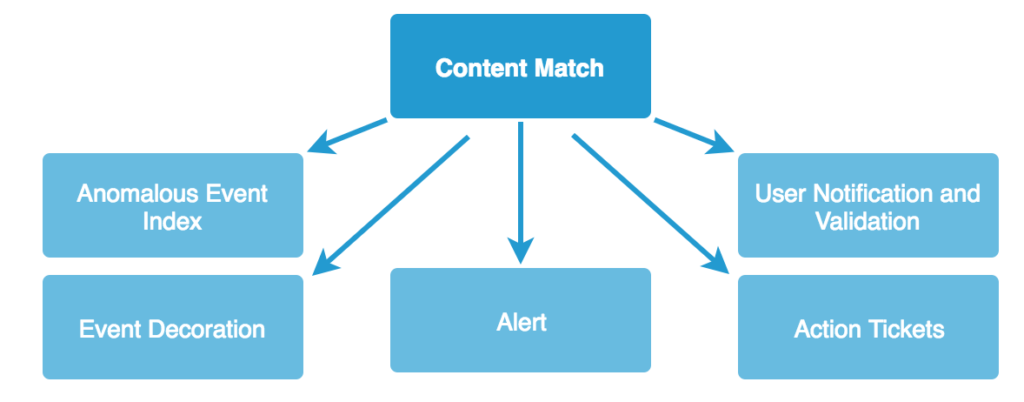

Instead of conceptualizing automated content matching and alerting as the same function, consider them individual pieces in a pipeline. Content matches are sent to the next step in the pipeline, which could involve alerting, anomalous event indexes, decoration, user notification, action tickets, or something else.
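One way to sketch this pipeline is a small router where each match names the outputs it should reach, and alerting is just one registered handler among several. The `Match` and `MatchRouter` classes and the output labels (`"alert"`, `"index"`) are hypothetical; in-memory lists stand in for a real alert queue and event index.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Match:
    """One content match, tagged with the pipeline outputs it should reach."""
    rule: str
    entity: str                 # host or user the match concerns
    outputs: tuple[str, ...]    # e.g. ("alert",), ("index",), ("alert", "index")
    details: dict = field(default_factory=dict)


Handler = Callable[[Match], None]


class MatchRouter:
    """Fans matches out to handlers; alerting is just one registered output."""

    def __init__(self) -> None:
        self.handlers: dict[str, list[Handler]] = {}

    def register(self, output: str, handler: Handler) -> None:
        self.handlers.setdefault(output, []).append(handler)

    def route(self, match: Match) -> int:
        """Deliver the match to every handler for its outputs; return the count."""
        delivered = 0
        for output in match.outputs:
            for handler in self.handlers.get(output, []):
                handler(match)
                delivered += 1
        return delivered


# Usage: plain lists stand in for an alert queue and an anomalous event index.
alert_queue: list[Match] = []
anomaly_index: list[Match] = []
router = MatchRouter()
router.register("alert", alert_queue.append)
router.register("index", anomaly_index.append)

n1 = router.route(Match("known-malware-hash", "host-17", ("alert", "index")))
n2 = router.route(Match("ua-not-on-whitelist", "host-17", ("index",)))
```

Adding decoration, user notification, or ticketing later means registering another handler rather than changing the matcher.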

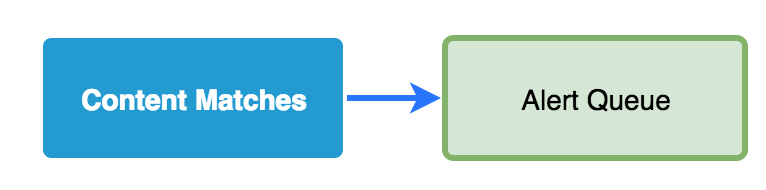

Alerting

The most common output of matching is an alert. An alert places the content match into a queue for human analysts to triage and investigate. Most analysts think of their SIEM as that queue, but the alert queue is only one function of most SIEMs and can exist as a standalone capability (like what is offered by Sguil or Squert).

Anomalous Event Indexes

If you only pursue content matches that are immediately actionable for detection, you miss much of the value content matching provides. Plenty of things are worth matching because they become useful in the context of an investigation, even though they aren’t alert-worthy on their own. Consider the following examples:

- A friendly system communicated using a user agent that isn’t on your whitelist of known good UAs.

- A friendly system communicated with an IP on a low-confidence blacklist.

- A user account logged into the VPN from a location they’ve never logged into from before.

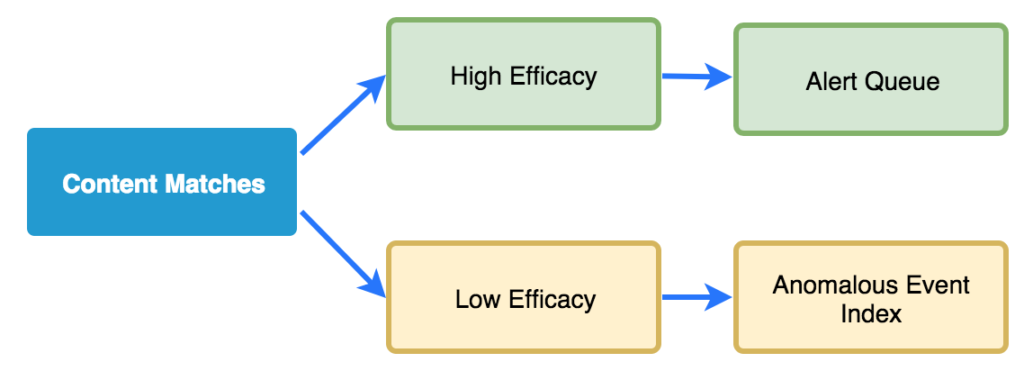

I wouldn’t want to alert on each of these scenarios because I’d be flooded with legitimate activity. When pairing content matches with alerts, we generally strive for a high probability of malicious activity; let’s say 99% for the sake of round numbers. Each scenario in the list above is much less likely to indicate malicious activity, but each still represents some degree of anomaly, perhaps 10-20%. That degree doesn’t warrant launching an investigation on its own, but it becomes useful in the context of another investigation when you’re trying to determine whether something malicious has occurred. A system or user can also fall under suspicion when several of these lower efficacy matches occur together in a compounding effect.
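The compounding effect can be approximated by counting how many distinct low-efficacy rules an entity trips within a time window. This is a minimal sketch: the 24-hour window, the three-rule threshold, and the rule names are assumptions chosen for illustration, and a real deployment might weight rules differently instead of counting them equally.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def entities_under_suspicion(matches, window=timedelta(hours=24), min_rules=3):
    """Return entities that hit at least `min_rules` distinct low-efficacy rules
    within some span no longer than `window`.

    `matches` is an iterable of (timestamp, entity, rule_name) tuples.
    """
    by_entity = defaultdict(list)
    for ts, entity, rule in matches:
        by_entity[entity].append((ts, rule))

    flagged = set()
    for entity, events in by_entity.items():
        events.sort()
        start = 0
        for end in range(len(events)):
            # Slide the left edge forward until the span fits inside the window.
            while events[end][0] - events[start][0] > window:
                start += 1
            distinct_rules = {rule for _, rule in events[start:end + 1]}
            if len(distinct_rules) >= min_rules:
                flagged.add(entity)
                break
    return flagged


# Usage with hypothetical matches mirroring the examples in the list above.
t0 = datetime(2024, 1, 1, 8, 0)
low_efficacy = [
    (t0, "ws-042", "ua-not-on-whitelist"),
    (t0 + timedelta(hours=2), "ws-042", "ip-on-low-confidence-blacklist"),
    (t0 + timedelta(hours=5), "ws-042", "vpn-new-location"),
    (t0, "ws-007", "ua-not-on-whitelist"),
    (t0 + timedelta(hours=1), "ws-007", "ua-not-on-whitelist"),
    (t0 + timedelta(days=3), "ws-007", "vpn-new-location"),
]
suspects = entities_under_suspicion(low_efficacy)
```

Here `ws-042` trips three different rules within five hours and is returned, while `ws-007` repeats one rule and only trips a second one days later, so it is not.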

In practice, these lower level context matches can be sent to secondary queues, or to an indexed event store like an Elastic/Splunk index. These events must be

Event Decoration

In addition to sending lower efficacy events to a dedicated searchable index, you can add this information to other events to provide context. Attaching additional information to existing events is typically called decoration or enrichment. Decoration requires software that can edit events either inline, as they are processed, or retroactively.

As an example, consider an investigation where you’re reviewing Windows event logs to determine what applications executed following suspicious network activity. You eventually come across an event representing an application you’ve never seen before. Along with the typical Windows event information, you also see a field that has additional tags that were decorated onto the event. Things like:

- NON-STANDARD: The application isn’t on the standard image for your network.

- BLACKLIST: The file hash appears on a blacklist obtained from a vendor or OSINT source. There’s not enough context from the list to alert on this input directly, but the information is useful in the investigative context.

- NEVER-BEFORE-SEEN: This application has never been seen on the network before (executed on the host or transferred over the network).

- NEW-FOR-USER: This application has never been executed by this user before.

Each of these context items changes how you view the event you’re examining and will direct the next steps in the investigation.
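A decoration step producing tags like these might look like the following sketch. The event field names (`image`, `sha256`, `user`), the `EnrichmentContext` class, and the lookup sets are hypothetical stand-ins; real Windows or Sysmon events use their own field names, and the lookup data would come from your asset inventory, threat intelligence feeds, and historical telemetry.

```python
from dataclasses import dataclass, field


@dataclass
class EnrichmentContext:
    """Hypothetical lookup data an enrichment step might maintain."""
    standard_image: set[str]     # application paths on the standard build
    hash_blacklist: set[str]     # low-confidence vendor/OSINT hashes
    seen_on_network: set[str]    # hashes ever observed on hosts or the wire
    seen_by_user: dict[str, set[str]] = field(default_factory=dict)


def decorate(event: dict, ctx: EnrichmentContext) -> dict:
    """Return a copy of a process-execution event with context tags added."""
    app, sha256, user = event["image"], event["sha256"], event["user"]
    tags = []
    if app not in ctx.standard_image:
        tags.append("NON-STANDARD")
    if sha256 in ctx.hash_blacklist:
        tags.append("BLACKLIST")
    if sha256 not in ctx.seen_on_network:
        tags.append("NEVER-BEFORE-SEEN")
    if sha256 not in ctx.seen_by_user.get(user, set()):
        tags.append("NEW-FOR-USER")
    return {**event, "tags": tags}  # the original event is left untouched


# Usage with placeholder hashes and paths.
ctx = EnrichmentContext(
    standard_image={r"C:\Windows\System32\notepad.exe"},
    hash_blacklist={"hash-bad"},
    seen_on_network={"hash-notepad"},
    seen_by_user={"alice": {"hash-notepad"}},
)
event = {"image": r"C:\Users\alice\AppData\Local\Temp\upd.exe", "sha256": "hash-bad", "user": "alice"}
decorated = decorate(event, ctx)
known = decorate({"image": r"C:\Windows\System32\notepad.exe", "sha256": "hash-notepad", "user": "alice"}, ctx)
```

Returning a new dictionary rather than mutating the input keeps the raw event intact, which matters if the same event is stored elsewhere as evidence.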

Unfortunately, I don’t see enrichment/decoration used much in practice. Some of this is because practitioners don’t consider the option, but mostly it’s because most content matching tools don’t support it. The tools that do are generally solutions that bundle content matching with event storage. There is an opportunity for more vendors and open-source developers to tackle this functionality.

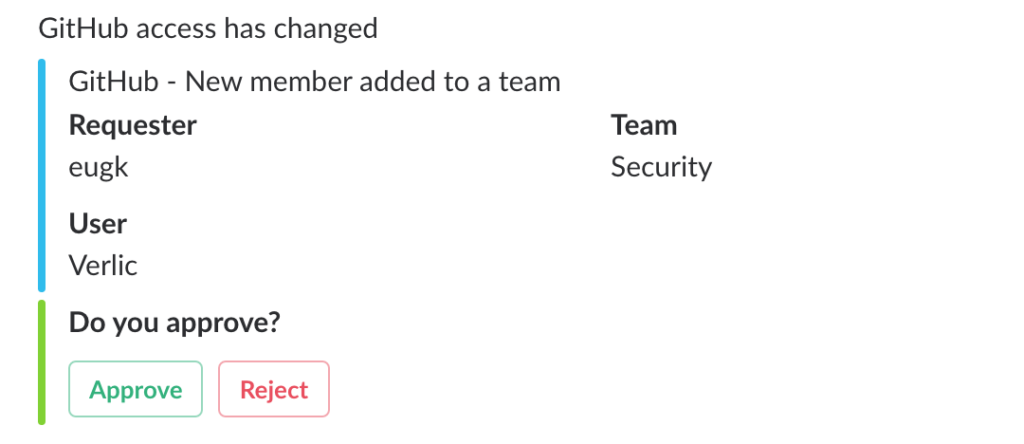

User Notification and Validation

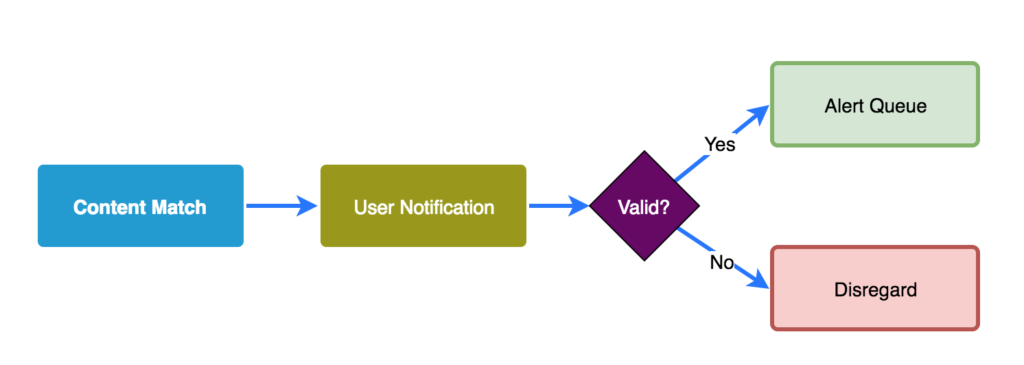

We choose to match many things because they represent an action that is legitimate when performed intentionally by the user who owns the system, but malicious when performed by anyone else. When alerts fire on those matches, most of your investigation revolves around proving that the legitimate user performed the action, which may even include contacting that user. This comes up in several scenarios:

- An authentication from a location that’s never been observed, or from a location geographically distant from another recent authentication.

- A series of failed login attempts followed by a success.

- The installation or use of remote administration tools (VNC, RAdmin, PsExec, etc).
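The second scenario is easy to express as a stateful match. The sketch below assumes authentication events arrive in time order as `(timestamp, user, outcome)` tuples with an outcome of `"fail"` or `"success"`; that schema, the five-failure threshold, and the ten-minute window are all illustrative assumptions.

```python
from datetime import datetime, timedelta


def failures_then_success(events, min_failures=5, window=timedelta(minutes=10)):
    """Yield (user, success_time) when a successful login follows at least
    `min_failures` failed logins for the same user within `window`."""
    recent_failures = {}  # user -> timestamps of recent failed logins
    for ts, user, outcome in events:
        # Keep only the failures that still fall inside the window.
        failures = [t for t in recent_failures.get(user, []) if ts - t <= window]
        if outcome == "fail":
            failures.append(ts)
            recent_failures[user] = failures
        elif outcome == "success":
            if len(failures) >= min_failures:
                yield user, ts
            recent_failures[user] = []  # a success resets the streak


# Usage with hypothetical events for three users, merged into time order.
t0 = datetime(2024, 1, 1, 9, 0)
events = sorted(
    [(t0 + timedelta(minutes=m), "bob", "fail") for m in range(5)]
    + [(t0 + timedelta(minutes=5), "bob", "success")]
    + [(t0 + timedelta(minutes=m), "carol", "fail") for m in (0, 1)]
    + [(t0 + timedelta(minutes=2), "carol", "success")]
    + [(t0 + timedelta(minutes=m), "dave", "fail") for m in (0, 6, 12, 18, 24)]
    + [(t0 + timedelta(minutes=25), "dave", "success")]
)
flagged = list(failures_then_success(events))
```

Only `bob` is returned: `carol` has too few failures, and most of `dave`'s failures have aged out of the window by the time he succeeds. A match like this is a good candidate for the user validation flow rather than a direct alert.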

You can take these content matches and use them to bring the end user into the loop. This takes some of the investigative

The most effective implementations of this strategy I’ve seen involve sending content matches relevant to individual users to them via shared chat applications like Slack. The content match is given to the user and a feedback mechanism allows them to denote whether they are responsible for the observed event. If they respond negatively or if they don’t respond in an acceptable time frame, the content match is escalated to an alert queue.

Source: https://auth0.engineering/cloud-security-monitoring-at-auth0-part-ii-b106354a0e5d

Any time you rely on an end user like this, the process is, of course, fallible. There are checks and balances that must be built in, but I’ve seen this become a reliable augmentation to detection and investigation processes.
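The confirm-or-escalate flow described in this section reduces to a small decision rule. This sketch deliberately leaves out the chat integration itself; `ConfirmationRequest`, `evaluate`, the 30-minute timeout, and the outcome names are hypothetical, and delivering the message and collecting the response would be handled by whatever chat bot you build.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from enum import Enum
from typing import Optional


class Outcome(Enum):
    CLOSED = "closed"        # user confirmed they performed the action
    ESCALATED = "escalated"  # user denied it, or never answered in time
    WAITING = "waiting"      # still inside the response window


@dataclass
class ConfirmationRequest:
    """A content match sent to the user it concerns (e.g. via a chat bot)."""
    match_id: str
    user: str
    sent_at: datetime
    timeout: timedelta = timedelta(minutes=30)
    response: Optional[bool] = None  # True = "that was me", False = "not me"


def evaluate(req: ConfirmationRequest, now: datetime) -> Outcome:
    """Decide whether a pending confirmation closes, escalates, or keeps waiting."""
    if req.response is True:
        return Outcome.CLOSED
    if req.response is False:
        return Outcome.ESCALATED
    if now - req.sent_at > req.timeout:
        return Outcome.ESCALATED  # silence is treated like a denial
    return Outcome.WAITING


# Usage with hypothetical requests.
t0 = datetime(2024, 1, 1, 12, 0)
pending = ConfirmationRequest("m-1", "alice", t0)
confirmed = ConfirmationRequest("m-2", "alice", t0, response=True)
denied = ConfirmationRequest("m-3", "bob", t0, response=False)
```

One simple check, in the spirit of the caveat above, is to keep confirmed matches in the searchable index rather than discarding them, so a compromised account that answers "that was me" still leaves a trail.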

Action Tickets

A few of the investigations you’ll work require early actions that must be taken by analysts, IT, or end users to limit the impact of compromise or infection. In some cases, you can actually pinpoint specific alerts that will always lead to action. This might be things like:

- Removing additional copies of phishing e-mails from other mailboxes once one has been discovered.

- Forcing a password reset when credentials are observed in the clear.

- Quarantining or isolating devices that execute known malware.

When events like these occur, you can feed your content matches directly into the ticketing system used to manage these tasks. This will only apply to some content matches and may require additional verification before the action is taken, but the ticketing system itself can usually facilitate that.

Since most organizations use a ticket system, this is relatively achievable when using content matching tools that can export specific events in a parseable format.
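As a sketch of that export, assuming the ticketing system accepts a JSON body through some intake API or email gateway, a small mapping from rule names to required actions is enough to decide which matches become tickets. The rule names, queue names, `ACTION_RULES`, `match_to_ticket`, and the ticket fields are all hypothetical; the actual submission call depends on your ticketing system and is omitted.

```python
import json
from typing import Optional

# Hypothetical mapping from detection rule to the follow-up action it always requires.
ACTION_RULES = {
    "phish-confirmed": {"action": "purge-matching-emails", "queue": "messaging"},
    "cleartext-credentials": {"action": "force-password-reset", "queue": "identity"},
    "known-malware-exec": {"action": "isolate-host", "queue": "endpoint"},
}


def match_to_ticket(match: dict) -> Optional[str]:
    """Return a JSON ticket body for matches that map to an action, else None."""
    spec = ACTION_RULES.get(match["rule"])
    if spec is None:
        return None  # not every content match should become a ticket
    ticket = {
        "queue": spec["queue"],
        "summary": f'{spec["action"]}: {match["entity"]}',
        "requires_verification": True,  # let the ticket workflow gate the action
        "source_match": match,
    }
    return json.dumps(ticket, sort_keys=True)


# Usage with a hypothetical match record.
body = match_to_ticket({"rule": "cleartext-credentials", "entity": "bob", "id": "m-42"})
```

Keeping the original match inside the ticket lets whoever performs the action see exactly what triggered it.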

Conclusion

In this post I’ve described how separating the functions of content matching and alerting can enable additional functionality that benefits the analyst. A few of these pathways for content match data include low-efficacy anomalous event indexes, event decoration, user notification and validation, and action tickets.

Your ability to take advantage of any of these techniques is dependent on your toolset, whether commercial or home-grown. If you’re a network operator, these abilities can inform the tools you deploy in your network. If you’re a vendor, they can inform your product roadmap and how you provide additional value derived from content matches.

While most of what I’ve discussed here is tied to basic content matches, much of it also applies to outputs from other forms of detection, like statistical analysis. Once you separate detection functions from their output, a lot of useful avenues open up.

Interested in becoming a better investigator or leveling up your toolbox? Check out my online training classes here.